Cyberdolia

Chihuahuas, Woodgrain Patterns, Cupcakes and Machine Vision

Essay, Moscow Art Magazine №127, 2025

The popular meme among tech-geeks, juxtaposing a chihuahua and a muffin, vividly demonstrates how unsettling, absurd similarities can arise between disparate entities in the field of computer vision.

This is a classic example of how a neural network model, trained to recognize a certain object, may very likely see it where it doesn't exist. We have observed how previous iterations of computer vision models have presented astonishing images: from a plate of spaghetti with meatballs, hallucinating a hellish "landscape" of dog faces in the Deep Dream interpretation, to the impressive modern short-film hallucinations in MPEG-4 format.

While the Chihuahua-muffin meme highlights AI's susceptibility to bias, a parallel can be drawn to human visual perception. Pareidolia, the phenomenon of perceiving familiar shapes, such as dog faces, in the grain of plywood, demonstrates inherent human visual biases. Despite this apparent similarity, the nature of these perceptual errors diverges. In the AI scenario, the model errs due to limited ability to distinguish between visually similar objects. This error could, in theory, be rectified through improved training data, refined architectural parameters, or adjusted weight configurations within the neural network. Conversely, human pareidolia stems from the brain's inherent pattern-seeking and cognitive biases, representing a fundamentally different type of perceptual processing error.

In the case of the plywood, you likely saw a dog's face in the wood grain because of the vast experience with dog faces and their many stylistic variations. The AI, on the other hand, likely failed to differentiate due to insufficient exposure to visual diversity in the training data and a lack of experience in recognizing the nuances of different dog breeds. This lack of visual experience can be a limiting factor for both humans and AI.

The quantity of visual experience, or the number of times one has encountered a specific object, directly impacts pattern recognition and visual acuity. For example, if you've seen many Chinese ideographic symbols (象形), you will likely become more adept at recognizing them and discerning their features. Even if you don't understand the symbol's meaning, you will develop the skill of recognizing it among others, regardless of the font.

Convolutional neural networks (CNNs), designed for image recognition, operate similarly. They learn through a complex chain of internal processes. The architecture of a CNN typically involves multiple layers of convolutional, pooling, and fully connected layers. Each layer of the neural network increases the complexity of the function of looking. The early layers focus on basic features like color and shape. As the data passes through layers, the neural network begins to recognize progressively larger elements, forms, and textures of the object, until, finally, it fully identifies it. The quality of recognition directly depends on the size and quality of the data used to train the model and the number of times those data pass through the model's layers. The quality depends on the training dataset, and the amount of time the model was trained.

The degree of training of the neural network depends on the visual experience: its range — "seeing many carefully selected examples" - or the limitations — "seeing random examples." Returning to the examples above, we can hypothesize that the failures were not just due to the internal structure of the viewer - humans or machines - but also to the external circumstances in which they were trained. For example, regarding the recognition of dogs in the texture of a sheet of plywood, it may be that, in addition to other things, our vision was guided by the instinct of self-preservation, which has historically conditioned the survival of humans as a species - I assume that in the past we had to develop the skill of quickly recognizing representatives of the wild fauna as a protective mechanism. In the case of an error in AI, as in the case of the Chihuahua-muffin meme, it may be that the training data set does not contain sufficient representative visual data. For example, we can assume that the internet contains many more muffin images than images of Chihuahuas, causing a bias in the data set. The training data set may have more muffins than Chihuahuas.

In the case of the plywood, you likely saw a dog's face in the wood grain because of the vast experience with dog faces and their many stylistic variations. The AI, on the other hand, likely failed to differentiate due to insufficient exposure to visual diversity in the training data and a lack of experience in recognizing the nuances of different dog breeds. This lack of visual experience can be a limiting factor for both humans and AI.

The quantity of visual experience, or the number of times one has encountered a specific object, directly impacts pattern recognition and visual acuity. For example, if you've seen many Chinese ideographic symbols (象形), you will likely become more adept at recognizing them and discerning their features. Even if you don't understand the symbol's meaning, you will develop the skill of recognizing it among others, regardless of the font.

Convolutional neural networks (CNNs), designed for image recognition, operate similarly. They learn through a complex chain of internal processes. The architecture of a CNN typically involves multiple layers of convolutional, pooling, and fully connected layers. Each layer of the neural network increases the complexity of the function of looking. The early layers focus on basic features like color and shape. As the data passes through layers, the neural network begins to recognize progressively larger elements, forms, and textures of the object, until, finally, it fully identifies it. The quality of recognition directly depends on the size and quality of the data used to train the model and the number of times those data pass through the model's layers. The quality depends on the training dataset, and the amount of time the model was trained.

The degree of training of the neural network depends on the visual experience: its range — "seeing many carefully selected examples" - or the limitations — "seeing random examples." Returning to the examples above, we can hypothesize that the failures were not just due to the internal structure of the viewer - humans or machines - but also to the external circumstances in which they were trained. For example, regarding the recognition of dogs in the texture of a sheet of plywood, it may be that, in addition to other things, our vision was guided by the instinct of self-preservation, which has historically conditioned the survival of humans as a species - I assume that in the past we had to develop the skill of quickly recognizing representatives of the wild fauna as a protective mechanism. In the case of an error in AI, as in the case of the Chihuahua-muffin meme, it may be that the training data set does not contain sufficient representative visual data. For example, we can assume that the internet contains many more muffin images than images of Chihuahuas, causing a bias in the data set. The training data set may have more muffins than Chihuahuas.

Hashterms

A speculative concept describing an emergent aesthetic system that arises from autonomous processes rather than from direct human intention. The term combines auto- (self, autonomous) with aesthetics, suggesting forms of perception, judgment, or sensibility generated by machines, algorithms, or self-organizing systems.

Refers to the interdisciplinary study of engineering systems of knowledge, encompassing its nature, sources, and limits. It draws from the term epistemics.

While pareidolia is the tendency to perceive meaningful images or patterns where none actually exist. Cyberdolia is a similar misinterpretation occurring in machine vision contexts.

Chihuahuas and muffins: the internet meme that perfectly illustrates the bias issues in AI models.

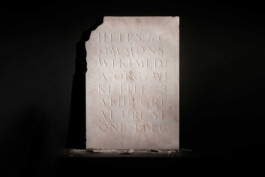

Dog faces in wood grain; a curated selection from Reddit forum users.

Latent Spaces and Domain Ontologies

The architecture of the latent space plays a crucial role in organizing the experiential knowledge of a neural network. The latent space, also known as the "space of hidden objects" or "space of embedding," is a mathematical model in which all possible images are represented as points, each corresponding to a unique set of characteristics of a given object, with similar objects placed close to each other. The depth of the latent space is determined by the model's ability to visualize or recognize the diversity of the objects it has learned.

To better understand this, let's consider a forest consisting of the most diverse trees. If an AI model has carefully studied each tree in this forest, it can virtually model the forest in such a way that similar trees are placed closer together, forming topologies of smoothly changing forms. For example, trees with fewer branches on one side or none at all will be located in the northern part, while dense and evenly branching trees will be in the southern part. And so on, following the principle of similarity - breeds, trunk structures, leaf colors, and many other features. What a truly dystopian forest it would be in reality!

Generative models like text-to-image, trained on a dataset of images of everything that can be depicted, such as Stable Diffusion, can be easily forced to synthesize a neighboring image between a "Chihuahua-muffin." In this case, the text query would be converted to a coordinate located between the orbits of both names in the latent space. This space is, in a sense, a cloud of knowledge of the model, containing everything the model has seen and everything it can draw based on mixing of all it has seen.

By addressing AI for creating hybrid interpretations of objects or even entire concepts, we violate the very essence of metaphysics by mixing fundamental ontological categories.

Since the mid-1970s, researchers in artificial intelligence have recognized that the process of engineering knowledge is key to creating large and powerful AI systems. Scholars claimed they could create new ontologies as computational models that enable automated reasoning. In the 1980s, the term "ontology" became used to denote both the theory of world modeling and knowledge system organization. As derivatives of the corresponding philosophical concept, computational ontologies have become a kind of applied philosophy.

Computational ontologies differ from philosophy in that they are created with specific goals and evaluated more in terms of applicability than completeness. Striving for classification and explanation of entities, they contain the idea of a universal vocabulary, definitions of concepts, and relationships between them. Tom Gruber, an American computer scientist known for his foundational work in ontology engineering in the context of AI, wrote in an 1993 article: "For models of knowledge organization, what 'exists' is precisely what can be represented." In other words, in information models of computer systems, the very vocabulary of represented concepts determines their existence. A computational ontology functions both as a database and as a structure of organizing information; it not only deals with the study of the nature of being, like a branch of philosophy, but is a real architecture that largely governs and organizes knowledge, its logistics, and the emergence of meanings. For example, ontology architectures of computer systems rely on entities such as files, paths, hypertext, links, classes, metadata, ascending and descending orders, access hierarchies, file systems, variables, and expansions, executable files, and much more; these are devices and elements forming the anatomy of the thinking architecture of AI.

If our concern with the philosophy of language has helped us understand the correlation between language, meaning, knowledge, perception, and the world, we might need to explore how applied ontologies affect all this. What are the scales of this influence on how we acquire and organize experience, make decisions, and what impact does it have outside of us, in the external world? Many modern computational developments in machine learning were created as means of automating information work and some became means of knowledge production themselves. Epistemology of AI models has a special quality, well-described by a single word: "programmability." These systems possess algorithmic awareness and enable generating information based on digital models of knowledge, a phenomenon in itself that represents interesting generative epistemologies.

Returning to visual images, we ask: What is the difference between an image of an object generated by an AI model, created and trained to generate hundreds of hyper-realistic images per second, and a random photograph of the same object, for example, obtained by searching on Google? Or is it a representation of the same object in our collective or individual memory? And can any of these generated representations be ontologically more correct, and therefore more real, than others? A question similar to that posed in Joseph Kosuth's landmark work of 1965, "One and Three Chairs," where he put the forms of representation of objects to the test.

It is essential to explore the impact of applied ontologies on the acquisition, organization of knowledge, decision-making, and its impact on the world beyond us.

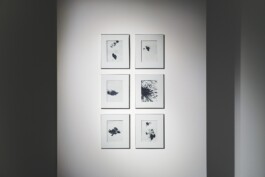

Ègor Kraft, 1&∞🪑 (2023), view of the video installation displaying frames from the video. The full version of the film can be seen at vimeo.com/egorkraft/chair.

Echo Chambers, One and Infinite Number of Chairs

"One and Three Chairs" - Perhaps the Most Cited Example of Conceptual Art of the Late 20th Century. This work embodies a number of characteristics that define conceptual art in general. Art that prioritizes the concept above form and content is associated with the dematerialization of art.

Kosuth’s work consists of three different presentations of a chair as an object: the chair itself, its photograph, and a description—a copy of a dictionary entry. The style of the chair, the material from which it is made, and other physical characteristics are not essential in this case, meaning that replacing one chair with another does not change the idea of the work. Moreover, according to the artist’s concept, the chair and, accordingly, its photograph must be new in each subsequent exhibition. The only constant elements are the copy of the dictionary entry and the installation setup scheme.

The self-referential nature of the work prompts its consideration within various philosophical exercises, for instance: What does the concept of a chair include? How does this concept relate to the image of a chair? How is the function of a chair defined within the notion of what it is? How can language, art, and ontological categories be manifested in physical reality? What is the relationship of this work to Plato’s theory of forms? One may recall the analytic philosopher Ludwig Wittgenstein, according to whose philosophy language, as a means of representation, plays a central role in understanding the world, while at the same time, the empiricist David Hume, who denied the existence of innate ideas, argued that new knowledge is the result of sensory data and repeated experience. And, of course, Immanuel Kant, with his Critique of Pure Reason, in which he reflects on how the physical form of a chair corresponds to our knowledge of it and how this knowledge can be applied by us.

Our expectation of seeing a work of art as an end in itself is an expectation of an object that stands apart from the world of objects. But instead, we are presented with an almost bare concept of an extremely mundane item—an ordinary chair. The artist is not so necessary here, as the very act of assembling the work is more the curator’s task. In this case, the curator is organizing representations of chairs, just as a text-to-image neural network organizes representations of objects.

Alvin Lucier, an American experimental composer and sound artist, in 1969, in the electronic music studio at Brandeis University, recorded I Am Sitting in a Room, which became one of the most iconic works of the genre. In this piece, Lucier speaks a text and records the sound of his voice on a tape recorder. He then plays back this recording and records it again through a microphone connected to a recording and playback device. The new recording is played back and recorded again. This process is repeated until, through re-recording, the words become completely indistinguishable, replaced by acoustic distortions of the sound frequencies characteristic of the space where the recording is made. The text, consisting of several sentences spoken by the author, describes the entire process of the sound installation, beginning with the words: “I am sitting in a room different from the one you are in now. I am recording the sound of my speaking voice…” and goes on to predict what will eventually happen to the recording of his voice in this act of repetition.

Thus, perhaps the observations outlined in this text lead to the following thesis: today, the complex architectures of planetary-scale computer systems and the equally complex algorithms governing interactions with the traces of collective knowledge stored within them—where the apex of this project is artificial intelligence—function not just as means of access (medium) but also as institutions of meaning and image production. The computational fusion of information into meaning, the synthetic cognitive capabilities of artificial intelligence, and the logistics of planetary interconnectedness not only change the way knowledge is produced and how those engaged with it interact but also influence the very fundamental ontology of concepts—what is what.

However, as an artist first and foremost, I would like to exercise the validity of this idea through my own work, curiously titled 1&∞🪑.

Joseph Kosuth, "One and Three Chairs," 1965 © 2024 Joseph Kosuth / Artists Rights Society (ARS), New York, Courtesy the artist and Sean Kelly Gallery, New York.

1&∞🪑

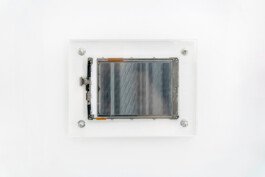

In this experiment, aimed at generating several hundred images of chairs, I turned to the popular text-to-image AI model, Stable Diffusion. The prompt used was: “one chair on a neutral background.” The resulting set of predominantly photorealistic images was then used for fine-tuning the same model, thereby predictably enhancing its ability to reproduce “a chair on a neutral background” across the entire reproducible diversity of this image.

This process of retraining the model on its own AI-generated images was repeated over and over—until, by the sixth iteration, instead of the photorealistic depiction of a chair that the neural network had so masterfully generated in the first phase, the model had degraded to such an extent that it produced only bright, colorful blotches bearing no resemblance to any image of a chair, instead resembling an awkward digital imitation of Mark Rothko’s paintings. In other words, the process continued until the figurative image of the chair had completely disappeared from the AI model’s representation of it.

It did not take long for the advanced Stable Diffusion model—widely regarded today as a triumphant product of applied computer vision engineering—to forget the trivial image of a chair through the process of studying its own interpretations of it.

In data science, the phenomenon in which AI is trained on data generated by other AI models is often referred to as data cannibalism. Due to the increasing demand for expanding datasets and the growing prevalence of AI-generated images and data, more and more new artificial intelligence systems will be trained on synthetic datasets—those synthesized by other generative AI models. This phenomenon introduces complications in this applied ontology and contaminates future datasets, impacting the epistemological and visual accuracy of models.

Could our AI models, as a result of this ongoing and accelerating synthesis of all data, ultimately lose their primary function of precise representation? Or, at the very least, could they blur the accuracy gained from studying representations of real objects by diluting it with the synthetic noise of perceptual degradation? The 1&∞🪑 experiment demonstrated how, under the influence of such feedback loops and the effect of algorithmic echo chambers of self-generated and self-consumed data, the domain ontology of an object and its visual representation disintegrate into non-figurative abstraction. At least, that’s how it appears—to the human eye.

However, is the image of the chair still visible to the machine in its final phase, where we can no longer see it? As observed in examples involving dog faces, plywood sheets, wood grain patterns, and muffins, both humans and machines can either perceive something that isn’t actually represented or fail to recognize what is plainly visible. And yet, does the machine still see a chair within the applied ontology of computational systems?

This is a crucial question because if the machine does see it, then, according to the structure of its applied ontology, it exists. Moreover, the chair becomes an executable object—like a .exe file, a line of code, or a prompt request. According to Tom Gruber, if something is represented, then it “exists.” This suggests that in an era of pervasive industrialization and planetary-scale computation, such failures can have tangible, real-world consequences beyond the illusory nature of their non-objective form.

Film One & Infinite Chairs

REFERENCES

- Gruber, T. R. A Translation Approach to Portable Ontology Specifications, 1993. URL: https://www.sci-hub.ru/10.1006/knac.1993.1008?ysclid=m2f3getglh617102346.

- Full text: “I am sitting in a room different from the one you are in now. I am recording the sound of my speaking voice and I am going to play it back into the room again and again until the resonant frequencies of the room reinforce themselves so that any semblance of my speech, with perhaps the exception of rhythm, is destroyed. What you will hear then are the natural resonant frequencies of the room articulated by speech. I regard this activity not so much as a demonstration of a physical fact, but more as a way to smooth out any irregularities my speech might have.” URL: https://www.youtube.com/watch?v=YUIPK8CWxpw&t=1423s.

Cyberdolia

Essay, Moscow Art Magazine №127, 2025

Chihuahuas, Woodgrain Patterns, Cupcakes and Machine Vision

Hashterms

Hashterms

A speculative concept describing an emergent aesthetic system that arises from autonomous processes rather than from direct human intention. The term combines auto- (self, autonomous) with aesthetics, suggesting forms of perception, judgment, or sensibility generated by machines, algorithms, or self-organizing systems.

Refers to the interdisciplinary study of engineering systems of knowledge, encompassing its nature, sources, and limits. It draws from the term epistemics.

While pareidolia is the tendency to perceive meaningful images or patterns where none actually exist. Cyberdolia is a similar misinterpretation occurring in machine vision contexts.

The popular meme among tech-geeks, juxtaposing a chihuahua and a muffin, vividly demonstrates how unsettling, absurd similarities can arise between disparate entities in the field of computer vision.

This is a classic example of how a neural network model, trained to recognize a certain object, may very likely see it where it doesn't exist. We have observed how previous iterations of computer vision models have presented astonishing images: from a plate of spaghetti with meatballs, hallucinating a hellish "landscape" of dog faces in the Deep Dream interpretation, to the impressive modern short-film hallucinations in MPEG-4 format.

While the Chihuahua-muffin meme highlights AI's susceptibility to bias, a parallel can be drawn to human visual perception. Pareidolia, the phenomenon of perceiving familiar shapes, such as dog faces, in the grain of plywood, demonstrates inherent human visual biases. Despite this apparent similarity, the nature of these perceptual errors diverges. In the AI scenario, the model errs due to limited ability to distinguish between visually similar objects. This error could, in theory, be rectified through improved training data, refined architectural parameters, or adjusted weight configurations within the neural network. Conversely, human pareidolia stems from the brain's inherent pattern-seeking and cognitive biases, representing a fundamentally different type of perceptual processing error.

In the case of the plywood, you likely saw a dog's face in the wood grain because of the vast experience with dog faces and their many stylistic variations. The AI, on the other hand, likely failed to differentiate due to insufficient exposure to visual diversity in the training data and a lack of experience in recognizing the nuances of different dog breeds. This lack of visual experience can be a limiting factor for both humans and AI.

The quantity of visual experience, or the number of times one has encountered a specific object, directly impacts pattern recognition and visual acuity. For example, if you've seen many Chinese ideographic symbols (象形), you will likely become more adept at recognizing them and discerning their features. Even if you don't understand the symbol's meaning, you will develop the skill of recognizing it among others, regardless of the font.

Convolutional neural networks (CNNs), designed for image recognition, operate similarly. They learn through a complex chain of internal processes. The architecture of a CNN typically involves multiple layers of convolutional, pooling, and fully connected layers. Each layer of the neural network increases the complexity of the function of looking. The early layers focus on basic features like color and shape. As the data passes through layers, the neural network begins to recognize progressively larger elements, forms, and textures of the object, until, finally, it fully identifies it. The quality of recognition directly depends on the size and quality of the data used to train the model and the number of times those data pass through the model's layers. The quality depends on the training dataset, and the amount of time the model was trained.

The degree of training of the neural network depends on the visual experience: its range — "seeing many carefully selected examples" - or the limitations — "seeing random examples." Returning to the examples above, we can hypothesize that the failures were not just due to the internal structure of the viewer - humans or machines - but also to the external circumstances in which they were trained. For example, regarding the recognition of dogs in the texture of a sheet of plywood, it may be that, in addition to other things, our vision was guided by the instinct of self-preservation, which has historically conditioned the survival of humans as a species - I assume that in the past we had to develop the skill of quickly recognizing representatives of the wild fauna as a protective mechanism. In the case of an error in AI, as in the case of the Chihuahua-muffin meme, it may be that the training data set does not contain sufficient representative visual data. For example, we can assume that the internet contains many more muffin images than images of Chihuahuas, causing a bias in the data set. The training data set may have more muffins than Chihuahuas.

Chihuahuas and muffins: the internet meme that perfectly illustrates the bias issues in AI models.

Dog faces in wood grain; a curated selection from Reddit forum users.

In the case of the plywood, you likely saw a dog's face in the wood grain because of the vast experience with dog faces and their many stylistic variations. The AI, on the other hand, likely failed to differentiate due to insufficient exposure to visual diversity in the training data and a lack of experience in recognizing the nuances of different dog breeds. This lack of visual experience can be a limiting factor for both humans and AI.

The quantity of visual experience, or the number of times one has encountered a specific object, directly impacts pattern recognition and visual acuity. For example, if you've seen many Chinese ideographic symbols (象形), you will likely become more adept at recognizing them and discerning their features. Even if you don't understand the symbol's meaning, you will develop the skill of recognizing it among others, regardless of the font.

Convolutional neural networks (CNNs), designed for image recognition, operate similarly. They learn through a complex chain of internal processes. The architecture of a CNN typically involves multiple layers of convolutional, pooling, and fully connected layers. Each layer of the neural network increases the complexity of the function of looking. The early layers focus on basic features like color and shape. As the data passes through layers, the neural network begins to recognize progressively larger elements, forms, and textures of the object, until, finally, it fully identifies it. The quality of recognition directly depends on the size and quality of the data used to train the model and the number of times those data pass through the model's layers. The quality depends on the training dataset, and the amount of time the model was trained.

The degree of training of the neural network depends on the visual experience: its range — "seeing many carefully selected examples" - or the limitations — "seeing random examples." Returning to the examples above, we can hypothesize that the failures were not just due to the internal structure of the viewer - humans or machines - but also to the external circumstances in which they were trained. For example, regarding the recognition of dogs in the texture of a sheet of plywood, it may be that, in addition to other things, our vision was guided by the instinct of self-preservation, which has historically conditioned the survival of humans as a species - I assume that in the past we had to develop the skill of quickly recognizing representatives of the wild fauna as a protective mechanism. In the case of an error in AI, as in the case of the Chihuahua-muffin meme, it may be that the training data set does not contain sufficient representative visual data. For example, we can assume that the internet contains many more muffin images than images of Chihuahuas, causing a bias in the data set. The training data set may have more muffins than Chihuahuas.

Latent Spaces and Domain Ontologies

The architecture of the latent space plays a crucial role in organizing the experiential knowledge of a neural network. The latent space, also known as the "space of hidden objects" or "space of embedding," is a mathematical model in which all possible images are represented as points, each corresponding to a unique set of characteristics of a given object, with similar objects placed close to each other. The depth of the latent space is determined by the model's ability to visualize or recognize the diversity of the objects it has learned.

To better understand this, let's consider a forest consisting of the most diverse trees. If an AI model has carefully studied each tree in this forest, it can virtually model the forest in such a way that similar trees are placed closer together, forming topologies of smoothly changing forms. For example, trees with fewer branches on one side or none at all will be located in the northern part, while dense and evenly branching trees will be in the southern part. And so on, following the principle of similarity - breeds, trunk structures, leaf colors, and many other features. What a truly dystopian forest it would be in reality!

Generative models like text-to-image, trained on a dataset of images of everything that can be depicted, such as Stable Diffusion, can be easily forced to synthesize a neighboring image between a "Chihuahua-muffin." In this case, the text query would be converted to a coordinate located between the orbits of both names in the latent space. This space is, in a sense, a cloud of knowledge of the model, containing everything the model has seen and everything it can draw based on mixing of all it has seen.

By addressing AI for creating hybrid interpretations of objects or even entire concepts, we violate the very essence of metaphysics by mixing fundamental ontological categories.

Since the mid-1970s, researchers in artificial intelligence have recognized that the process of engineering knowledge is key to creating large and powerful AI systems. Scholars claimed they could create new ontologies as computational models that enable automated reasoning. In the 1980s, the term "ontology" became used to denote both the theory of world modeling and knowledge system organization. As derivatives of the corresponding philosophical concept, computational ontologies have become a kind of applied philosophy.

Computational ontologies differ from philosophy in that they are created with specific goals and evaluated more in terms of applicability than completeness. Striving for classification and explanation of entities, they contain the idea of a universal vocabulary, definitions of concepts, and relationships between them. Tom Gruber, an American computer scientist known for his foundational work in ontology engineering in the context of AI, wrote in an 1993 article: "For models of knowledge organization, what 'exists' is precisely what can be represented." In other words, in information models of computer systems, the very vocabulary of represented concepts determines their existence. A computational ontology functions both as a database and as a structure of organizing information; it not only deals with the study of the nature of being, like a branch of philosophy, but is a real architecture that largely governs and organizes knowledge, its logistics, and the emergence of meanings. For example, ontology architectures of computer systems rely on entities such as files, paths, hypertext, links, classes, metadata, ascending and descending orders, access hierarchies, file systems, variables, and expansions, executable files, and much more; these are devices and elements forming the anatomy of the thinking architecture of AI.

If our concern with the philosophy of language has helped us understand the correlation between language, meaning, knowledge, perception, and the world, we might need to explore how applied ontologies affect all this. What are the scales of this influence on how we acquire and organize experience, make decisions, and what impact does it have outside of us, in the external world? Many modern computational developments in machine learning were created as means of automating information work and some became means of knowledge production themselves. Epistemology of AI models has a special quality, well-described by a single word: "programmability." These systems possess algorithmic awareness and enable generating information based on digital models of knowledge, a phenomenon in itself that represents interesting generative epistemologies.

Returning to visual images, we ask: What is the difference between an image of an object generated by an AI model, created and trained to generate hundreds of hyper-realistic images per second, and a random photograph of the same object, for example, obtained by searching on Google? Or is it a representation of the same object in our collective or individual memory? And can any of these generated representations be ontologically more correct, and therefore more real, than others? A question similar to that posed in Joseph Kosuth's landmark work of 1965, "One and Three Chairs," where he put the forms of representation of objects to the test.

It is essential to explore the impact of applied ontologies on the acquisition, organization of knowledge, decision-making, and its impact on the world beyond us.

Ègor Kraft, 1&∞🪑 (2023), view of the video installation displaying frames from the video. The full version of the film can be seen at vimeo.com/egorkraft/chair.

Echo Chambers, One and Infinite Number of Chairs

"One and Three Chairs" - Perhaps the Most Cited Example of Conceptual Art of the Late 20th Century. This work embodies a number of characteristics that define conceptual art in general. Art that prioritizes the concept above form and content is associated with the dematerialization of art.

Joseph Kosuth, "One and Three Chairs," 1965 © 2024 Joseph Kosuth / Artists Rights Society (ARS), New York, Courtesy the artist and Sean Kelly Gallery, New York.

Kosuth’s work consists of three different presentations of a chair as an object: the chair itself, its photograph, and a description—a copy of a dictionary entry. The style of the chair, the material from which it is made, and other physical characteristics are not essential in this case, meaning that replacing one chair with another does not change the idea of the work. Moreover, according to the artist’s concept, the chair and, accordingly, its photograph must be new in each subsequent exhibition. The only constant elements are the copy of the dictionary entry and the installation setup scheme.

The self-referential nature of the work prompts its consideration within various philosophical exercises, for instance: What does the concept of a chair include? How does this concept relate to the image of a chair? How is the function of a chair defined within the notion of what it is? How can language, art, and ontological categories be manifested in physical reality? What is the relationship of this work to Plato’s theory of forms? One may recall the analytic philosopher Ludwig Wittgenstein, according to whose philosophy language, as a means of representation, plays a central role in understanding the world, while at the same time, the empiricist David Hume, who denied the existence of innate ideas, argued that new knowledge is the result of sensory data and repeated experience. And, of course, Immanuel Kant, with his Critique of Pure Reason, in which he reflects on how the physical form of a chair corresponds to our knowledge of it and how this knowledge can be applied by us.

Our expectation of seeing a work of art as an end in itself is an expectation of an object that stands apart from the world of objects. But instead, we are presented with an almost bare concept of an extremely mundane item—an ordinary chair. The artist is not so necessary here, as the very act of assembling the work is more the curator’s task. In this case, the curator is organizing representations of chairs, just as a text-to-image neural network organizes representations of objects.

Alvin Lucier, an American experimental composer and sound artist, in 1969, in the electronic music studio at Brandeis University, recorded I Am Sitting in a Room, which became one of the most iconic works of the genre. In this piece, Lucier speaks a text and records the sound of his voice on a tape recorder. He then plays back this recording and records it again through a microphone connected to a recording and playback device. The new recording is played back and recorded again. This process is repeated until, through re-recording, the words become completely indistinguishable, replaced by acoustic distortions of the sound frequencies characteristic of the space where the recording is made. The text, consisting of several sentences spoken by the author, describes the entire process of the sound installation, beginning with the words: “I am sitting in a room different from the one you are in now. I am recording the sound of my speaking voice…” and goes on to predict what will eventually happen to the recording of his voice in this act of repetition.

Thus, perhaps the observations outlined in this text lead to the following thesis: today, the complex architectures of planetary-scale computer systems and the equally complex algorithms governing interactions with the traces of collective knowledge stored within them—where the apex of this project is artificial intelligence—function not just as means of access (medium) but also as institutions of meaning and image production. The computational fusion of information into meaning, the synthetic cognitive capabilities of artificial intelligence, and the logistics of planetary interconnectedness not only change the way knowledge is produced and how those engaged with it interact but also influence the very fundamental ontology of concepts—what is what.

However, as an artist first and foremost, I would like to exercise the validity of this idea through my own work, curiously titled 1&∞🪑.

1&∞🪑

In this experiment, aimed at generating several hundred images of chairs, I turned to the popular text-to-image AI model, Stable Diffusion. The prompt used was: “one chair on a neutral background.” The resulting set of predominantly photorealistic images was then used for fine-tuning the same model, thereby predictably enhancing its ability to reproduce “a chair on a neutral background” across the entire reproducible diversity of this image.

Ègor Kraft, 1&∞🪑 (2023), video stills. Selected chair images from the 1st and 2nd iterations of training and image generation in the creation of the work using a text-to-image AI model.

This process of retraining the model on its own AI-generated images was repeated over and over—until, by the sixth iteration, instead of the photorealistic depiction of a chair that the neural network had so masterfully generated in the first phase, the model had degraded to such an extent that it produced only bright, colorful blotches bearing no resemblance to any image of a chair, instead resembling an awkward digital imitation of Mark Rothko’s paintings. In other words, the process continued until the figurative image of the chair had completely disappeared from the AI model’s representation of it.

It did not take long for the advanced Stable Diffusion model—widely regarded today as a triumphant product of applied computer vision engineering—to forget the trivial image of a chair through the process of studying its own interpretations of it.

In data science, the phenomenon in which AI is trained on data generated by other AI models is often referred to as data cannibalism. Due to the increasing demand for expanding datasets and the growing prevalence of AI-generated images and data, more and more new artificial intelligence systems will be trained on synthetic datasets—those synthesized by other generative AI models. This phenomenon introduces complications in this applied ontology and contaminates future datasets, impacting the epistemological and visual accuracy of models.

Could our AI models, as a result of this ongoing and accelerating synthesis of all data, ultimately lose their primary function of precise representation? Or, at the very least, could they blur the accuracy gained from studying representations of real objects by diluting it with the synthetic noise of perceptual degradation? The 1&∞🪑 experiment demonstrated how, under the influence of such feedback loops and the effect of algorithmic echo chambers of self-generated and self-consumed data, the domain ontology of an object and its visual representation disintegrate into non-figurative abstraction. At least, that’s how it appears—to the human eye.

However, is the image of the chair still visible to the machine in its final phase, where we can no longer see it? As observed in examples involving dog faces, plywood sheets, wood grain patterns, and muffins, both humans and machines can either perceive something that isn’t actually represented or fail to recognize what is plainly visible. And yet, does the machine still see a chair within the applied ontology of computational systems?

This is a crucial question because if the machine does see it, then, according to the structure of its applied ontology, it exists. Moreover, the chair becomes an executable object—like a .exe file, a line of code, or a prompt request. According to Tom Gruber, if something is represented, then it “exists.” This suggests that in an era of pervasive industrialization and planetary-scale computation, such failures can have tangible, real-world consequences beyond the illusory nature of their non-objective form.

Film One & Infinite Chairs

REFERENCES

- Gruber, T. R. A Translation Approach to Portable Ontology Specifications, 1993. URL: https://www.sci-hub.ru/10.1006/knac.1993.1008?ysclid=m2f3getglh617102346.

- Full text: “I am sitting in a room different from the one you are in now. I am recording the sound of my speaking voice and I am going to play it back into the room again and again until the resonant frequencies of the room reinforce themselves so that any semblance of my speech, with perhaps the exception of rhythm, is destroyed. What you will hear then are the natural resonant frequencies of the room articulated by speech. I regard this activity not so much as a demonstration of a physical fact, but more as a way to smooth out any irregularities my speech might have.” URL: https://www.youtube.com/watch?v=YUIPK8CWxpw&t=1423s.

Studio Addresses

Ikejiri, Setagaya, Tokyo, Japan

Neubau, Vienna, Austria

Studio

E. G. Kraft – artist-researcher, founder

Anna Kraft – researcher, director

Contact:

mail[at]kraft.studio

Studio Addresses

Ikejiri, Setagaya, Tokyo, Japan

Neubau, Vienna, Austria

Contact

mail[at]kraft.studio

Studio

E. G. Kraft – artist-researcher, founder

Anna Kraft – researcher, director