Content Aware Studies

On Synthetic Historiography

Paper for International Symposium on Machine Learning and Art

City University, Hong Kong, CHN.

Published as proceedings, 2021

Abstract

The use of machine learning as a scientific tool in historical analysis and reproduction brings to the forefront ethical questions of bias contamination in data and in the automation of its analysis. Through examples of various confusing para-scientific interventions, including AI-based decryptions of the Voynich Manuscript, and artistic investigations such as the speculative series Content Aware Studies, this paper examines the various sides of this inquiry and its consequences. It also looks into the material repercussions of objects as synthetic documents of an emerging machine-rendered history. The text further attempts to instrumentalise recent theoretical developments, such as agential realism, in the analysis of computation in its advanced forms and their derivatives, including AI, its output, and its ontologies. Its focus is the ethical, philosophical, and historical challenges we face when using such automated means of knowledge production and investigation, and the epistemic value such methodologies hold if they uncover deeper and sharply unexpected new knowledge instead of masking unacknowledged biases. The series Content Aware Studies is one of the key case studies, as it vividly illustrates the results of machine-learning technologies as a means of automating and augmenting historical and cultural documents, museology, and historiography, taking speculative forms of restoration not only within historical and archaeological contexts but also in contemporary applications across machine vision and sensing technics, such as LiDAR scanning. These outputs also provide a case study for critical examination, through the lens of cultural sciences, of potentially misleading trajectories in knowledge production and of the epistemic focal biases that occur at the level of the applications and processes described above. Given the preoccupation with warnings and ontologies related to biases, authenticities, and materialities, we seek to illustrate them vividly. 
As data in this text is seen as crude material and the building blocks of inherent bias, the new materialist framework helps address these notions in a non-anthropocentric way, locating the subjects of investigation as encounters between non-organic bodies. From the perspective of the non-human agency of the AI investigator, which parts of our historical knowledge and interpretation encoded in the datasets will survive this digital digestion? How are historical narratives and documents, and their meanings and functions, perverted when their analysis is outsourced to machine vision and cognition? In other words, what happens to historical knowledge and documentation in an age of information-production epidemics and computational reality-engineering?

Hashterms

A form of parahistorical investigative practice in which gaps in our knowledge of the past are studied and filled in using machine learning and generative AI techniques involving historical archive datasets.

Pareidolia is the tendency to perceive meaningful images or patterns where none actually exist; cyberdolia is a similar misinterpretation occurring in machine vision contexts.

Historical findings and narratives resulting from reverse archaeology and other algorithmic methodologies.

It relates to technological literacy in the same way that one relates to spiritual or mystical knowledge.

Relates to aesthetic forms that emerge as a result of generative AI.


Synthetic Histories

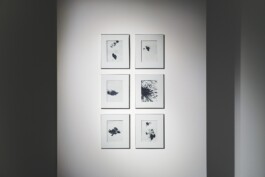

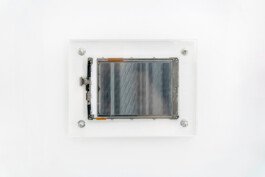

Let us start with a few key thoughts to open up speculations and thought-and-object experiments related to history, matter, agency, and computation. History in this text is seen as data, while data is seen as crude material and a critical resource for content-form-knowledge production, through which we attempt to raise questions of origin and genuineness. How do we view historical objects as documents, and how are such seemingly embedded properties as provenance or authenticity viewed when observed and interpreted through the lens of machine vision? And why is ‘lens’ not a good metaphor for a better collective understanding of machine vision? Perhaps the central question is what ethical and scientific challenges we confront when using such automated means of production and investigation. These questions are asked in relation to synthetic forms of knowledge production: the results of processing historical archives with machine-learning models, materialised through automated fabrication technics (e.g., 3D printing and CNC milling). They inquire into the capacities and consequences of such machine-learning technologies as a means of automated historical investigation, and question whether AI-rendered findings are still historical. Can AI-led investigations allow us to uncover deeper and sharply unexpected new knowledge, or do they mask unacknowledged biases? As part of this investigation, let us look at a collaborative artistic intervention as a case study that seeks to establish a methodology for investigating these machine-learning capacities. The research examines how various modern AI models, including SDF-based and diffusion models as well as Generative Adversarial Networks (GANs), which are particularly known for their advances in computer vision and hyperrealistic image rendering, operate when trained on datasets consisting of thousands of 3D scans from renowned international museum collections. 
Custom-trained neural network models are directed to replenish lost fragments of classical friezes and sculptures and thus to generate never-before-existing objects of classical antiquity. The algorithm generates results convertible into 3D models, which are then 3D-printed or CNC-milled and used either to fill the voids of the original sculptures or to create entirely new machine-fabricated marble or synthetic objects. These objects faithfully restore original forms while also producing bizarre errors and algorithmic misinterpretations of Hellenistic and Roman art, which are then embodied in machine-carved stone blocks. Some of these blocks are a thousand years old, like the stone from which the original Hellenistic sculptures were carved. This allows for an interesting juxtaposition: original and AI-derived antiquities are materially the same, even though the latter are rendered through automated synthetic cognition and production. This series of works is used as a case study for critical examination of potentially misleading trajectories in knowledge production and of the epistemic focal biases that occur at the level of these hybrid experiments. It is inspired by real examples in which similar AI techniques are being ubiquitously instrumentalized, as seen across investigations of historical documents, including the Voynich Manuscript (Artnet 2018), a collaboration between the British Library and the Turing Institute, and other similar projects. However, before celebrating such advances, we might first critically examine the role of such forms of knowledge production: how does one distinguish between accelerated forms of empirical investigation and algorithmic bias? Will this distinction hold if such methods become the new normal of historiography? To what degree can machine-learning-based approaches help us augment our methods of analysis, as opposed to poisoning our empirical methodologies with synthetic bias, a product of machinic, or even non-human, agency? 
How far should we consider an algorithm a tool to study with, as opposed to an inevitable force that will change how and what we study to begin with? These questions are not new to media theory, nor are they new to anthropology. Research at Emory University led by anthropologist Dietrich Stout suggests that the process of making tools changed human neurology. Stout claims⁷ that neural circuits of the brain changed to adapt to Palaeolithic toolmaking, and that this adaptation played a key role in primitive forms of communication (Stout 2016, 28–35). Projecting these dynamics onto various forms of computational information manipulation, we may speculate that these tools, as forms of knowledge production, may unpack new latent languages and possibilities contained within our minds. We think that we know how we think, but machines that think might know it differently.
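The restoration step described in this section, in which trained models "replenish lost fragments", can be illustrated with a deliberately simplified stand-in. This is a minimal sketch, not the GAN pipeline of the series: it swaps in nearest-neighbour completion and assumes that each scan has been reduced to a fixed-length list of numbers. The function `fill_gaps` and the `corpus` values are hypothetical. It shows the point at issue: every gap is filled with something taken from the dataset, whether or not the dataset contains anything like the missing original.

```python
from typing import List, Optional, Sequence

# Hypothetical stand-in: each scan is reduced to a fixed-length list of numbers
# (for example, depths sampled along a profile); None marks a lost fragment.

def fill_gaps(fragment: Sequence[Optional[float]],
              dataset: Sequence[Sequence[float]]) -> List[float]:
    """Fill every None in `fragment` by copying values from the dataset example
    that is closest on the entries that survive."""
    observed = [i for i, v in enumerate(fragment) if v is not None]
    if not observed:
        raise ValueError("fragment has no surviving entries to compare")

    def distance(example: Sequence[float]) -> float:
        # Squared Euclidean distance, measured only where the fragment survives.
        return sum((fragment[i] - example[i]) ** 2 for i in observed)

    nearest = min(dataset, key=distance)
    # Surviving values are kept as they are; each gap is copied from `nearest`.
    return [v if v is not None else nearest[i] for i, v in enumerate(fragment)]


# A tiny hypothetical corpus: two similar profiles and one outlier.
corpus = [
    [1.0, 2.0, 3.0, 4.0],
    [1.1, 2.1, 3.0, 4.0],
    [9.0, 8.0, 7.0, 6.0],
]

print(fill_gaps([1.0, 2.0, None, None], corpus))  # [1.0, 2.0, 3.0, 4.0]
# A fragment unlike anything in the corpus is still completed from it:
print(fill_gaps([5.0, None, None, 5.0], corpus))  # [5.0, 2.1, 3.0, 5.0]
```

Nothing in the output signals that the second completion is borrowed: the gap is simply closed with whatever the corpus happens to contain, which is the kind of bias this section asks us to examine.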

Perhaps, to demystify the notion of the algorithm and the nature of biases, it may help to view them through the lens of recent theoretical developments referred to as new materialism or the ontological turn. To do so, let us acknowledge the ever-present entanglement of forces and complex dynamics as a fundamental condition occurring between a multitude of agencies via their material-discursive apparatuses (as described by Karen Barad in "Agential Realism: On the Importance of Material-Discursive Practices")¹. This theoretical model is particularly useful if we acknowledge that computation itself is essentially made possible by the entanglement of matter and meaning, so that it is not only a project of the applied sciences but also a vividly onto-epistemological notion. In other words, computers are materially programmable apparatuses that enable knowledge production and logistics; made from rare and common earth materials, they are very efficient at continually scaling this programmability and the logistics of information.

However, let us suggest that the very principle of computation is more a discovery than an invention. Repeatable, verifiable statements, the hallmark of mathematics and of early forms of computing, have existed for thousands of years and across multiple civilizations. Observing the global expansion of modern computational infrastructure, including transoceanic internet cables, supercomputers, and huge data centres, one can argue that it is a radical and growing development, redesigning the relationship between matter and information on a planetary scale.

We all know how pop culture has misleadingly depicted AI by endowing it with an extremely anthropomorphised agency: the ghost in the machine, matter and intelligence in one body. However, if we look closely at AI’s material embodiment, it is a lot more similar to flora than, say, fauna, let alone a cyborg such as the T-1000 of Terminator 2: Judgment Day. The latter, of course, illustrates our fears of AI, well described by Benjamin Bratton as the Copernican trauma. These fears are epitomised by the AI computer HAL 9000 in Kubrick’s 2001: A Space Odyssey, which, in response to the human command “Open the pod bay doors”, answers: “I’m afraid I can’t do that, Dave.”

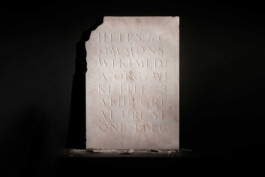

Hylomorphism and Materiality

Materiality has reappeared as a highly contested topic, not only in recent philosophy and media studies but also in recent art. Modernist criticism tended to privilege form over matter, considering the material as the essentialized basis of medium specificity, and technically based approaches in art history reinforced connoisseurship through the science of artistic materials. But to engage critically with materiality in the post-digital era, the era of big data and automation, we may require a more advanced set of methodological tools. Let us treat digital infrastructure as entirely physical, and thus re-examine how it is commonly described as “immaterial.” If we acknowledge that data itself is not immaterial but a generative product of complex infrastructures, including the magnetic materials hosting it (and the physical responses of their electrons’ magnetic dipole moments), data centres, Wi-Fi, low-frequency radio signals, transatlantic cables, and satellites, amongst other elements, we may view the global network of computational apparatuses, its software and hardware, as a planetary conveyor belt producing and handling data. To develop this argument further, we turn to the aforementioned instruments of new materialist critique. We may do so by addressing materialist critiques of artistic production, surveying the relationships between matter and bodies, exploring the “vitality” of substances, and looking closely at the concepts of inter-materiality and trans-materiality emerging in the hybrid zones of digital experimentation. Building on Bennett's notion of vital and vibrant matter², an understanding of expanding universes between objects comes into play, which leads us to ask: how are we to understand agency between matters, the dynamics between inhuman objects undefined by human intervention? 
We used to think of artistic work as a process of turning formless materials into intelligible forms, i.e., paint into a painting, clay into a sculpture, and data into a model. These ways of thinking about form and being go back to Aristotle's hylomorphism. However, does this assumption about matter and capacity still hold after developments in digital infrastructure, media theory, quantum physics, and what Karen Barad, in the subtitle of her book, calls the entanglement of matter and meaning¹? The aforementioned theoretical developments of agential realism, affect theory, and new materialism provide us with new deterministic methods. In the words of Miranda Bruce, “New materialism tries not to have a set of maxims, but as a whole, it does emphasise a non-anthropocentric approach. This means it doesn’t just pay attention to other organic lifeforms – but also non-organic ontology and agency. It focuses on how all kinds of matter are an organising and agential part of existence” (Bruce 2014). From the new materialist point of view, the meeting of clay and sculptor is actually an encounter between non-inert material bodies, each with its own agency and capacities. Perhaps the reverse-archaeology artistic series provides a good case study for the overwhelming complexities of new materialist dynamics, as opposed to hylomorphic relationships, since the authorship of the sculptures is distributed, equally or not, between the StyleGAN algorithm, the contents of the datasets, classical sculptors, CNC routers, 3D printers, and finally the artist. 
The agency of the author has somewhat dissolved within the thingness of things, as follows: a motor-driven spinning end mill of a five-axis CNC machine, under a jet of water coolant, encounters a marble block composed of recrystallized carbonate minerals and shapes it into a form defined through an encounter with a dataset of 3D-scanned historical documents, encoded as collections of three-dimensional model files, converted into binary files to be processed by computational algorithms based on mathematical equations describing a multidimensional vector space, enabled by a multi-layered software stack, which triggers electric signals across the semiconductor microchips of a GPU-accelerated server within a computer cluster, which processes and routes millions of electric signals and request-response operations across its RAM, CPU, GPU, VRAM, solid-state drives, hard disks, and other hardware components. Once physical, the marble output is treated with various nitric acid solutions, each layer adding centuries of apparent age. Hardware, software, and data are here active authors and creators of objects, no longer merely tools. One can look at any sculpture of the series as an embodiment of new materiality, illustrating how materials and meanings confront, violate, or interfere with common standards as mediators within entanglements of processes, though any other object would serve equally well. Approaching one of the sculptures, a portrait of the Roman empress Julia Mamea, we see the marble bust of a woman; but seen from all sides, the portrait turns out to be an uncannily distorted amalgamation of glitches filling the gaps in the acid-aged marble. Where human intuition would lead us to expect an ear or a cheekbone, the polyamide inlay depicts multiple eyes rippling along the side of her face. Is an archive of such objects, then, a museum of synthetic history, filled with documents of algorithmic prejudice?
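One link in the chain above, the conversion of 3D-scanned forms into binary files, can be made concrete. This is a minimal sketch, assuming the scans have already been reduced to triangle meshes and that binary STL, a format widely used to prepare 3D printing and CNC jobs, is the target; the series' actual file formats and tooling are not specified in the text, and the function names are hypothetical. Each triangle becomes 50 bytes: a facet normal and three vertices as twelve little-endian 32-bit floats, followed by a 16-bit attribute field.

```python
import struct
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]

def facet_normal(a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    """Unit normal of triangle (a, b, c) via the cross product (b - a) x (c - a)."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    # A degenerate (zero-area) triangle has length 0; fall back to 1 to avoid dividing by zero.
    length = (nx * nx + ny * ny + nz * nz) ** 0.5 or 1.0
    return (nx / length, ny / length, nz / length)

def to_binary_stl(triangles: Iterable[Tuple[Vec3, Vec3, Vec3]]) -> bytes:
    """Pack triangles into the binary STL layout: an 80-byte header,
    a uint32 triangle count, then 50 bytes per triangle."""
    tris = list(triangles)
    out = bytearray(80)                      # header, left as zero bytes here
    out += struct.pack("<I", len(tris))      # number of triangles
    for a, b, c in tris:
        # Normal followed by the three vertices: 12 float32 values.
        out += struct.pack("<12f", *facet_normal(a, b, c), *a, *b, *c)
        out += struct.pack("<H", 0)          # attribute byte count, usually 0
    return bytes(out)

# One hypothetical triangle lying in the XY plane.
data = to_binary_stl([((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))])
print(len(data))  # 134 = 80 + 4 + 50
```

Once in this form, a fragment of a historical object is nothing but a sequence of bytes, which is exactly the state in which the downstream algorithms and machines encounter it.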

Fig. 1. CAS_05_Julia Mamea, 2019, Egor Kraft, marble, polyamide, Copyright by Egor Kraft.

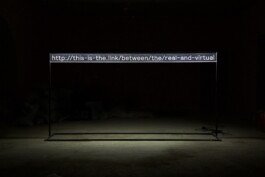

Predispositions by Design

Preoccupied with these warnings and ontologies of bias, the series examines which visual and aesthetic qualities such guises convey when rendered by a synthetic agency and perceived through our anthropocentric lens. What of our historical knowledge and interpretation encoded in the datasets will survive this digital digestion? Having established the notion of machine-generated history, let us now unpack its problematics. Current research by the British Library and the Turing Institute is directed at using AI to analyse large digitized collections “to provide new insights into the human impact of the Industrial Revolution.” Such an intervention raises the question of to what degree we may, and should, accept the deliverables of machine analysis of archival data as grounds for truth when aiming at historical reconstruction. The sculptural voids that the project aims to fill are also the information least represented in the dataset: noses, fingers, chins, and other extremities are the parts most often lost because of their fragility, which causes further misrepresentation. Is the same not true of the digitized archives mentioned above? We must acknowledge the blind spots in the data: history is pre-saturated with one-sided narratives, misinterpretations, and accounts written by the victors of conflicts. For the sake of precision, it should be noted that bias is introduced not only by data but also by algorithms, their architecture, and the parameters of their operation, including the number of training epochs and the floating-point precision format. The latter is the binary number format in which values are represented during training: FP16 (half precision) stores each value in 16 bits, while FP32 (single precision) uses 32 bits, providing both finer precision and a wider dynamic range for handling data and thus for delivering output. 
Consider the aforementioned example of the AI-led decryption of the Voynich Manuscript, a 240-page illustrated codex purchased in 1912 by a Polish book dealer, containing botanical drawings, celestial diagrams, and naked female figures, all accompanied by text in an unknown script and an unknown language that no one has so far been able to interpret. In early 2018, computer scientists at the University of Alberta claimed to have deciphered this inscrutable handwritten 15th-century codex, which had baffled cryptologists, historians, and linguists for decades⁴, stymied by its seemingly unbreakable code. The manuscript had also become the subject of conspiracy theories claiming it had extraterrestrial origins or was a medieval prank without hidden meaning. Using natural language processing and machine-learning techniques, the researchers reported finding over 80 percent of its words in a Hebrew dictionary. However, these claims have met harsh scepticism outside the computer-science community. AI may approach problems as puzzles that it tries to solve by brute force, even if the set of pieces is incomplete and, worse, gleaned from other puzzles. In other words, AI is unlikely to see beyond the subject it was trained to see. Instead, it will find that very subject whether or not it is there: from a plate of spaghetti and meatballs hallucinated into a hellscape of dog faces on a Deep Dream trip2, to a residual neural network revealing an alarming resemblance between chihuahuas and muffins³, and finally to how AI deciphered the Voynich Manuscript. Consider also the AI-revisited version of the Lumière brothers' 1895 film 'Arrival of a Train at La Ciotat', which has been upscaled to blazing 4K resolution, interpolated to 60 frames per second, and colourised. It unsettles our sense of the material's age by actively triggering and confusing our reading of its aesthetic codes. 
This recently re-rendered footage comes across as confusingly uncanny, yet still somewhat archival. First, the high-definition aspect places it in the post-digital realm, perverting the age of the original recording. Second, the high frame rate of 60 frames per second leans further towards this perversion, causing it to be read as if it were from the second decade of the 21st century, when 60 fps had become a common standard. The final augmentation is the introduction of colour to the original black-and-white footage, which, because of its desaturated hues, confusingly imitates 1960s-era material. The augmentations performed by the machine-learning algorithms thus rip the footage out of time, leaving us with a Frankenstein-like archival document. We are confronted with augmented pixels, synthetic colour, and a confusing timestamp bias, which leaves us wondering in what sense this footage remains archival material.
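The difference between these two formats can be observed directly. This sketch uses Python's standard `struct` module, whose `'e'` and `'f'` format codes pack IEEE 754 half- and single-precision values, to store the same number at each precision and read it back. It illustrates rounding only, not the behaviour of any particular training framework; `round_trip` is a hypothetical helper name.

```python
import struct

def round_trip(value: float, fmt: str) -> float:
    """Store `value` in a narrower IEEE 754 format and read it back.
    struct code 'e' is binary16 (FP16, half precision);
    'f' is binary32 (FP32, single precision)."""
    return struct.unpack("<" + fmt, struct.pack("<" + fmt, value))[0]

x = 0.1
fp16 = round_trip(x, "e")
fp32 = round_trip(x, "f")
print(fp16)  # 0.0999755859375
print(fp32)  # 0.10000000149011612

# FP16 also has a much narrower range: its largest finite value is 65504.
print(round_trip(65504.0, "e"))  # 65504.0
```

The FP16 result is off by roughly 2.4e-5 and the FP32 result by roughly 1.5e-9. Repeated across millions of weights and updates, differences of this kind are one way in which the choice of format shapes what a model produces.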
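Two of these augmentations can be quantified with simple arithmetic. This is a minimal sketch, assuming a hypothetical source rate of 16 frames per second (the frame rate of the original print is not given in the text) and using the standard ITU-R BT.601 luma weights as a stand-in for how colour maps to grey. It counts how many frames of a 60 fps version have no counterpart in the source and must be synthesised, and it shows why colourising grey values is guesswork: many different colours map to the same grey. The function names are hypothetical, and no actual interpolation or colourisation model is modelled.

```python
from fractions import Fraction

def source_position(out_index: int, src_fps: int, out_fps: int) -> Fraction:
    """Where output frame `out_index` falls on the source timeline,
    measured in source frames (an integer means it coincides with a real frame)."""
    return Fraction(out_index * src_fps, out_fps)

def synthetic_share(src_fps: int, out_fps: int, seconds: int = 1) -> Fraction:
    """Share of output frames that fall between source frames and therefore
    have to be synthesised by some interpolator (not modelled here)."""
    total = out_fps * seconds
    original = sum(1 for k in range(total)
                   if source_position(k, src_fps, out_fps).denominator == 1)
    return Fraction(total - original, total)

def to_gray(rgb: tuple) -> int:
    """8-bit grey level of an RGB colour, using the ITU-R BT.601 luma weights."""
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)

# Hypothetical 16 fps source shown at 60 fps: only 1 frame in 15 is original.
print(synthetic_share(16, 60))  # 14/15

# Saturated red, a mid green and a neutral grey all collapse to grey level 76,
# so a colouriser working backwards from 76 must guess which one was filmed.
print(to_gray((255, 0, 0)), to_gray((0, 130, 0)), to_gray((76, 76, 76)))  # 76 76 76
```

Under these assumptions, fourteen of every fifteen frames in the 60 fps version are machine-made, and every colour in the colourised version is one choice among many that the original grey values permit.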

Fig. 2. Deep Dream Chihuahua, unknown artist: https://www.topbots.com/chihuahua-muffin-searching-bestcomputer-vision-api/

Historical Investigations at Blazing Ultra Resolution

To speculate on potential policy changes for AI-led investigations, and in response to questions about the changing nature of historical objects through their interaction with computational interventions, it may be helpful to analyse the responses that took place within Archaeoinformatics as the field became “firmly and irreversibly digitized” throughout the 1990s and early 2000s. There we can observe changes in policy on research methods as a reaction to their computational evolution. We witnessed the rise of international and domestic laws answering calls to protect cultural heritage, data ethics, and personal information in historical archives⁵. Recording archaeological data became less about creating exact digital copies and more about preserving an exact record of how excavators interacted with the observed object. Looking at this evolution within Archaeoinformatics, we might consider record-keeping as a method of addressing ethical concerns and questions of bias in historical knowledge production. Yet LiDAR scans of excavation sites are acts of machine observation, with humans in this equation still holding the reins of moral responsibility. This method of additional documentation is somewhat analogous to the distinction between supervised and unsupervised machine learning. In the former, humans still play a supervisory role, as they do in these archaeological examples. But what of unsupervised machine learning? Earlier in the text, we touched upon one of the pillars of AGI emergence: that it needs pre-programmed means of self-awareness in order to account for its own bias. In concluding, we can speculate that until machine cognition systems are trained to recognise themselves, AI as the lead investigator is doomed to fail to account for its own agency, which, as our case study suggests, has lasting repercussions. 
The thoughts expanded in this paper do not provide solutions; rather, they point towards alarming outcomes if the outlined complexities are disregarded. They acknowledge that the nature of these complexities lies within the computational phenomenon itself, or more specifically, its onto-epistemological capacity, materiality, and programmability. We may address growing concerns about possible future scenarios in which our past is largely augmented by automated AI investigators to which its study has been ingenuously outsourced. Hence, whilst the evolution of scientific tools is, in fact, “a good thing,” it is crucial to highlight continuously that this progress not only fails to eliminate existing biases but likely amplifies them. Awareness of these biases must therefore be kept at the forefront of conversations and of the design of tools, so that we do not succumb to the naive fantasy that historical-detective-virtual-assistant-led research may be the way towards historical investigations at blazing ultra-resolution.
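Record-keeping of this kind can be sketched as a simple provenance log that notes, for every intervention, whether a human or a machine acted and whether a human supervised it. The schema below (`Intervention`, `unsupervised_machine_steps`, and the sample entries) is hypothetical and not drawn from any archaeological standard. It only shows how such a record makes unsupervised machine steps visible and queryable.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class Intervention:
    """One recorded interaction with an object (hypothetical schema)."""
    step: str                     # what was done, e.g. "LiDAR scan"
    agent: str                    # who or what acted
    is_machine: bool              # True if the agent is a machine
    supervised_by: Optional[str]  # human reviewer, or None if unsupervised

def unsupervised_machine_steps(log: List[Intervention]) -> List[str]:
    """Steps performed by a machine with no human reviewer recorded."""
    return [i.step for i in log if i.is_machine and i.supervised_by is None]

log = [
    Intervention("excavation", "field team", False, None),
    Intervention("LiDAR scan", "scanner", True, "site archaeologist"),
    Intervention("gap completion", "generative model", True, None),
]

print(unsupervised_machine_steps(log))  # ['gap completion']
```

In such a record, the supervised LiDAR scan and the unsupervised generative completion are kept apart, so the point at which human moral responsibility lapses is documented rather than dissolved into the object.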

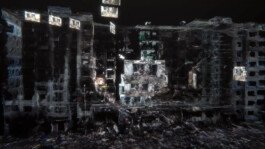

Fig. 3. Snapshot from GAN-generated latent space walk video from the CAS series, 2019, Egor Kraft.

References

1. Karen Barad. 2007. Meeting the universe halfway: Quantum physics and the entanglement of matter and meaning. Duke University Press.

2. Jane Bennett. 2020. Vibrant matter: A political ecology of things. Duke University Press.

3. Eric Hahn. 2022. Saving Cinema: Circulation and Preservation in the Age of Computational Film. University of California, Irvine.

4. Jennifer Pascoe. 2018. Using AI to uncover ancient mysteries. University of Alberta 25 (2018).

5. Lorna-Jane Richardson. 2018. Ethical challenges in digital public archaeology. Journal of Computer Applications in Archaeology 1, 1 (2018), 64–73.

6. Christopher H Roosevelt, Peter Cobb, Emanuel Moss, Brandon R Olson, and Sinan Ünlüsoy. 2015. Excavation is destruction digitization: advances in archaeological practice. Journal of Field Archaeology 40, 3 (2015), 325–346.

7. Dietrich Stout. 2016. Tales of a Stone Age Neuroscientist. Scientific American 314, 4 (2016), 28–35.

Content Aware Studies

On Synthetic Historiography

Paper for International Symposium

on Machine Learning and Art

City University, Hong Kong, CHN.

Published as proceedings, 2021

Hashterms

Download PDF

A form of parahistorical investigative practice in which gaps in our knowledge of the past are studied and filled in using machine learning and generative AI techniques involving historical archive datasets.

While pareidolia is the tendency to perceive meaningful images or patterns where none actually exist. Cyberdolia is a similar misinterpretation occurring in machine vision contexts.

Historical findings and narratives resulting from reverse archaeology and other algorithmic methodologies.

It relates to technological literacy in the same way that one relates to spiritual or mystical knowledge.

Relates to aesthetic forms that emerge as a result of generative Ai.

Abstract

The use of machine learning in historical analysis and reproduction as a scientific tool brings to the forefront ethical questions of bias contamination in data and the automation of its analysis. Through examples of various confusing para-scientific interventions, including AI-based Voynich Manuscript decryptions and artistic investigations, such as the speculative series Content Aware Studies, this paper examines the various sides of this inquiry and its consequences. It also looks into the material repercussions of objects as synthetic documents of emerging machine-rendered history. This text also attempts to instrumentalise recent theoretical developments, such as agential realism in the analysis of computation in its advanced forms and their derivatives, including AI, its output, and its ontologies.The focus of this text is the ethical, philosophical, and historical challenges we face when using such automated means of knowledge production and investigation, and what epistemics such methodologies hold by uncovering deeper and sharply unexpected newknowledge instead of masking unacknowledged biases. The series Content Aware Studies is one of the key case studies, as it vividly illustrates the results of machine-learning technologies as a means of automation and augmentation of historical and cultural documents, museology, and historiography, taking speculative forms of restoration not only within historical and archaeological contexts but also in contemporary applications across machine vision and sensing technics, such as LiDAR scanning. These outputs also provide a case study for critical examination through the lens of cultural sciences of potential misleading trajectories in knowledge production and epistemic focal biases that occur at the level of the applications and processes described above. Given the preoccupation with warnings and ontologies related to biases, authenticities, and materialities, we seek to vividly illustrate them. 
As data in this text is seen as crude material and building blocks of inherent bias, the new materialist framework helps address these notions in a non-anthropocentric way, while seeking to locate the subjects of investigations as encounters between non-organic bodies. In the optics of a non-human agency of the AI-investigator, what parts of our historical knowledge and interpretation encoded in the datasets will survive this digital digestion? How are historical narratives and documents, and their meanings and functions perverted when their analysis is outsourced to machine vision and cognition? In other words, what happens to historical knowledge and documentation in the age of information-production epidemics and computational reality-engineering?

Synthetic Histories

Let us start with a few key thoughts to open up speculations and thought-and-object-experiments related to history, matter, agency, and computation. History in this text is seen as data; while data is seen as crude material and a critical resource for content-form-knowledge production, through which we attempt to raise questions of origin and genuineness. How do we view historical objects as documents; and how are such seemingly embedded properties as provenance or authenticity viewed when observed and interpreted through the lens of machine vision. And why ‘lens’ is not a good metaphor for better collective understanding of machine vision. Perhaps, the central question is what are the ethical and scientific challenges we are confronting with when it comes to using such automated means of production and investigation. These questions are asked in relation to synthetic forms of knowledge production as results of processing historical archives via machine-learning models materialised via automated fabrication technics (i.e. 3D-printing, CNC, etc). They inquire about the capacities and consequences of such machine-learning technologies as a means of automated historical investigation, and question whether AI-rendered findings are still historical. Can AI-led investigations allow us to uncover deeper and sharply unexpected new knowledge, or do they mask unacknowledged biases? As part of this investigation, let’s look into the collaborative artistic intervention, as a case study that seeks to establish the methodology of investigating these machine-learning capacities. The research examines how various modern AI models, including SDF and other diffusion models and General Adversarial Networks (GANs) which are particularly known for their advances in computer vision and hyperrealistic image rendering, operate when trained on datasets consisting of thousands of 3D scans from renowned international museum collections. 
Custom trained neural network models are directed to replenish lost fragments of classical friezes and sculptures and thus generate previously never-existing objects of classical antiquity. The algorithm generates results convertible into 3D models, which are then 3D-printed or CNC-ed and used to fill the voids of the original sculptures or turned into entirely new machine-fabricated marble or synthetic objects, faithfully restoring original forms, while also producing bizarre errors and algorithmic misinterpretations of Hellenistic and Roman art, which are then embodied in machine-carved stone blocks. Some of these blocks are thousand-year-old, just like those original Hellenistic sculptures were made from. Which allows for an interesting juxtaposition, given that both original and Ai-derived antiquities are materially the same, even though the latter is rendered via automated synthetic cognition and production. This series of works is used as a case study for critical examination of potentially misleading trajectories in knowledge production and epistemic focal biases that occur at the level of these hybrid experiments. It is inspired by real examples, where similar AI techniques are being ubiquitously instrumentalized, as seen across investigations of historical documents, including the Voynich Manuscript (Artnet 2018), a collaboration between the British Library and the Turing Institute and other similar projects. However, before celebrating such advances, we might as well first critically examine the role of such forms of knowledge production; how does one distinguish between accelerated forms of empirical investigation and algorithmic bias? Will the question hold up if this is the new normal of historiography? To what degree can machine-learning-based approaches help us augment our methods of analysis as opposed to poisoning our empirical methodologies with synthetic bias, a product of machinic, or even non-human agency? 
How far should we consider an algorithm a tool to study with, as opposed to an inevitable force that will change how and what we study to begin with? These questions are not new for media theory, nor are they for anthropology. Research at Emory University, led by anthropologist Dietrich Stout, suggests that the process of making tools changed human neurology. Stout claims⁷ that neural circuits of the brain underwent changes to adapt to Palaeolithic toolmaking, and that this played a key role in primitive forms of communication (Stout 2016, 28–35). Projecting these dynamics onto various computational techniques of information manipulation, we may speculate that these tools, as forms of knowledge production, may unpack new latent languages and possibilities contained within our minds. We think that we know how we think, but machines that think might know it differently.

Perhaps, to demystify the notion of the algorithm and the nature of biases, it may be helpful to view them through the lens of recent theoretical developments referred to as new materialism or the ontological turn. To do so, let us acknowledge the ever-present entanglement of forces and complex dynamics as a fundamental condition occurring between a multitude of agencies via their material-discursive apparatuses (as described by Karen Barad in Agential Realism: On the Importance of Material-Discursive Practices)¹. This theoretical model is particularly useful to us if we acknowledge that the phenomenon of computation itself is possible only through the entanglement of matter and meaning, so it is not only a project of the applied sciences but also a vividly onto-epistemological notion. In other words, computers are materially programmable apparatuses that enable knowledge production and logistics; made from rare and common earth materials, they are very efficient at continually scaling this programmability and these logistics of information.

However, let us suggest that the very principle of computation is more a discovery than an invention. The repeatable, verifiable statements that are the hallmark of mathematics and of early forms of computing have existed for thousands of years, across multiple civilizations. Observing the global expansion of modern computational infrastructure, including transoceanic internet cables, supercomputers, and huge data centres, one can argue that it is a radical and growing development that is redesigning the relationship between matter and information on a planetary scale.

We all know how pop culture has misleadingly depicted AI by endowing it with extremely anthropomorphised agency, the ghost in the machine: both matter and intelligence in one body. However, if we look closely at AI’s material embodiment, it is much more similar to flora than to fauna, let alone a cyborg Terminator such as the T-1000 model in Terminator 2: Judgment Day. The latter, of course, illustrates our fears of AI, which Benjamin Bratton has aptly described as a Copernican trauma. These fears are well encapsulated by the AI computer HAL in Kubrick’s 2001: A Space Odyssey, which, in response to the human command “Open the pod bay doors,” answers: “I’m afraid I can’t do that, Dave.”

Hylomorphism and Materiality

Materiality has reappeared as a highly contested topic, not only in recent philosophy and media studies but also in recent art. Modernist criticism tended to privilege form over matter, treating the material as the essentialized basis of medium specificity, and technically based approaches in art history reinforced connoisseurship through the science of artistic materials. But to engage critically with materiality in the post-digital era, the time of big data and automation, we may require a more advanced set of methodological tools. Let us address digital infrastructure as entirely physical, and thus re-examine how it is commonly described as “immaterial.” If we acknowledge that data itself is not immaterial but a generative product of complex infrastructures, including the magnetic materials hosting it and the associated physical responses of electron magnetic dipole moments, data centres, Wi-Fi, low-frequency radio signals, transatlantic cables, and satellites, amongst other elements, we may view the global network of computational apparatuses, its software and hardware, as a planetary conveyor belt producing and handling data. To develop this argument further, we turn to the aforementioned instruments of new materialist critique. We may do so by addressing materialist critiques of artistic production, surveying the relationships between matter and bodies, exploring the “vitality” of substances, and looking closely at the concepts of inter-materiality and trans-materiality emerging in the hybrid zones of digital experimentation. Building on Bennett's notion of vital and vibrant matter², an understanding of expanding universes between objects comes into play, which leads us to ask: how should we understand agency between matters, and the dynamics between inhuman objects not defined by human intervention? 
We used to think of artistic work as a process of turning formless materials into intelligible forms, i.e., paint into a painting, clay into a sculpture, and data into a model. This way of thinking about form and being goes back to Aristotle's term hylomorphism. However, does this assumption about matter and capacity still hold after developments in digital infrastructure, media theory, quantum physics, and what Karen Barad, in the subtitle of her book, calls the entanglement of matter and meaning? The aforementioned social theory developments of agential realism, affect theory, and new materialism provide us with new deterministic methods. In the words of Miranda Bruce, “New materialism tries not to have a set of maxims, but as a whole, it does emphasise a non-anthropocentric approach. This means it doesn’t just pay attention to other organic lifeforms – but also non-organic ontology and agency. It focuses on how all kinds of matter are an organising and agential part of existence” (Bruce 2014). From the new materialist point of view, the meeting of clay and sculptor is actually an encounter between non-inert material bodies, each with its own agency and capacities. Perhaps the reverse-archaeology artistic series provides a good case study for the overwhelming complexities of new materialist dynamics, as opposed to hylomorphic relationships, since the authorship of the sculptures is distributed, equally or not, between the StyleGAN algorithm, the contents of the datasets, classical sculptors, CNC router machines, 3D printers, and finally the artist. 
The agency of the author has somewhat dissolved within the thingness of things, as follows: a motor-driven spinning end mill of a five-axis CNC machine, under a jet of water coolant, encounters a marble block composed of recrystallized carbonate minerals and shapes it into a form defined through an encounter with a dataset consisting of 3D-scanned historical documents; these are encoded as collections of 3D model files and converted into binary files to be processed by computational algorithms based on mathematical equations describing multidimensional vector space, enabled via a multi-layered software stack, which triggers electric signals across the semiconductor microchips of a GPU-accelerated server within computer clusters, which processes and routes millions of electric signals and request-response operations across its RAM, CPU, GPU, VRAM, solid-state drives, hard disks, and other hardware components. Once physical, the marble output is treated with various nitric acid solutions, each layer adding centuries of apparent age. Hardware, software, and data here are active authors and creators of objects, no longer merely tools. One can look at any sculpture of the series as an embodiment of new materiality, illustrating how materials and meanings confront, violate, or interfere with common standards as mediators within entanglements of processes, though any other object would serve as well. Approaching one of the sculptures, a portrait of the Roman empress Julia Mamea, we see the marble bust of a woman; but seen from all sides, the portrait turns out to be an uncannily distorted amalgamation of glitches filling the gaps in the acid-aged marble. Where human gut feeling would lead us to expect an ear or a cheekbone, the polyamide inlay depicts multiple eyes rippling along the side of her face. Is an archive of such objects, then, a museum of synthetic history, filled with documents of algorithmic prejudice?

Fig. 1. CAS_05_Julia Mamea, 2019, Egor Kraft, marble, polyamide, Copyright by Egor Kraft.

Predispositions by Design

Preoccupied with these warnings and ontologies of biases, the series examines which visual and aesthetic qualities such guises convey when rendered by a synthetic agency and perceived through our anthropocentric lens. What of our historical knowledge and interpretation encoded in the datasets will survive this digital digestion? Having established the notion of machine-generated history, let us now unpack its problematics. Current research by the British Library and the Turing Institute uses AI to analyse large digitized collections “to provide new insights into the human impact of the Industrial Revolution.” Such interventions raise the question of the degree to which we may, and should, accept the deliverables of machine analysis of archival data as ground truth for historical reconstruction. The sculptural voids that the project aims to fill correspond to the information least represented in the dataset: noses, fingers, chins, and other extremities are the parts most often lost because of their fragility, which causes further misrepresentation. Is the same not true of the archival collections mentioned above? We must acknowledge the blind spots in the data: history is pre-saturated with one-sided narratives, misinterpretations, and accounts written by the victors of conflicts. For the sake of precision, it should be noted that bias is introduced not only by data but also by algorithms, their architecture, and their operational parameters, including the number of training epochs and the floating-point precision format. The latter is the binary number format in which values are stored and computed during training, and it determines numerical precision: FP16 (half precision) uses 16 bits per value, while FP32 (single precision) uses 32 bits, offering a wider dynamic range and finer resolution in handling data and thus in delivering output. 
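To make the FP16/FP32 distinction more tangible, here is a minimal sketch using only Python's standard library, assuming the IEEE 754 half- and single-precision formats that the `struct` module exposes through its `'e'` and `'f'` format codes. The helper names `round_trip`, `update_survives`, and `fits` are hypothetical and purely illustrative; the sketch is not drawn from the training pipeline of the CAS series. It shows two effects: a small increment to 1.0 that survives in FP32 is rounded away in FP16, and a value such as 70,000 exceeds FP16's largest finite value (65,504) while fitting comfortably in FP32.

```python
import struct

# Format codes of Python's struct module (IEEE 754 binary formats):
# 'e' = half precision (FP16), 'f' = single precision (FP32).
FP16, FP32 = "e", "f"


def round_trip(value: float, fmt: str) -> float:
    """Store a Python float in the given binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def update_survives(fmt: str, base: float = 1.0, step: float = 1e-4) -> bool:
    """True if base + step is still distinguishable from base once rounded to fmt."""
    return round_trip(base + step, fmt) != round_trip(base, fmt)


def fits(value: float, fmt: str) -> bool:
    """True if value can be packed into fmt without overflowing (struct raises otherwise)."""
    try:
        struct.pack(fmt, value)
        return True
    except OverflowError:
        return False


if __name__ == "__main__":
    for name, fmt in (("FP16", FP16), ("FP32", FP32)):
        print(name,
              "keeps 1.0 + 0.0001:", update_survives(fmt),
              "| stores 70000.0:", fits(70000.0, fmt),
              "| 1/3 stored as:", round_trip(1 / 3, fmt))
```

The standard-library `struct` module was chosen over a numerical library so that the sketch runs without dependencies. In practice, mixed-precision training schemes typically keep some values, such as master copies of the weights, in FP32 precisely to avoid the kind of rounding loss illustrated here.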
Consider the aforementioned example of the AI-led decryption of the Voynich Manuscript, an illustrated codex of some 240 pages purchased in 1912 by a Polish book dealer, containing botanical drawings, celestial diagrams, and naked female figures, all accompanied by text in an unknown script and an unknown language that no one has so far been able to interpret. In early 2018, computer scientists at the University of Alberta claimed to have deciphered this inscrutable handwritten 15th-century codex, which had baffled cryptologists, historians, and linguists for decades⁴, stymied by its seemingly unbreakable code. The manuscript had become the subject of conspiracy theories claiming that it had extraterrestrial origins or that it was a medieval prank without hidden meaning. Using natural language processing and machine-learning techniques, the researchers reported that over 80 percent of the words could be found in a Hebrew dictionary. However, these claims have met harsh scepticism outside the computer-science community. AI may approach problems as puzzles that it tries to solve by brute force, even if the pieces are incomplete or, worse, gleaned from other puzzles. In other words, AI is unlikely to see beyond the subject it was trained to see. Instead, it will find that very subject regardless of whether it is there: from a plate of spaghetti and meatballs hallucinated into a hellscape of dog faces on a Deep Dream trip2, to a residual neural network revealing an alarming resemblance between chihuahuas and muffins³, and finally to AI deciphering the Voynich Manuscript. Consider also the AI-revisited version of the Lumière brothers' 1895 film 'Arrival of a Train at La Ciotat', which has been upscaled to blazing 4K resolution, rendered at 60 frames per second, and colourised. It unsettles our sense of the material's age by triggering and confusing the codes through which we read aesthetic references. 
This recently re-rendered footage comes across as confusingly uncanny, yet still somewhat archival. First, the high-definition image places it in the post-digital realm, perverting the age of the original recording. Second, the high frame rate of 60 frames per second leans further into this perversion, inviting us to read it as if it were from the second decade of the 21st century, when 60 fps had become a common standard. The final augmentation is the introduction of colour to the original black-and-white footage, which, because of its desaturated hues, confusingly imitates aged material from the 1960s. The augmentations performed by the machine-learning algorithms thus rip the footage out of time, leaving us with a Frankenstein-like archival document. We are confronted with augmented pixels, synthetic colour, and a confusing timestamp bias, which leaves us wondering in what way this footage remains archival material.

Fig. 2. Deep Dream Chihuahua, unknown artist: https://www.topbots.com/chihuahua-muffin-searching-bestcomputer-vision-api/

Historical Investigations at Blazing Ultra Resolution

To speculate on potential changes in the design of policies related to AI-led investigations, and in response to questions about the changing nature of historical objects through their interaction with computational interventions, it may be helpful to analyse the responses that took place within Archaeoinformatics as it became “firmly and irreversibly digitized” throughout the 1990s and early 2000s. There we can observe changes in policy regarding research methods as a reaction to their computational evolution. We witnessed the rise of international and domestic laws answering calls to protect cultural heritage, data ethics, and personal information in historical archives⁵. Recording archaeological data became less about creating exact digital copies and more about preserving an exact record of how excavators interacted with the observed object. Looking at this evolution within Archaeoinformatics, we might consider record-keeping as a method of addressing ethical concerns and questions of bias in historical knowledge production. LiDAR scans of excavation sites are acts of machine observation, yet humans in this equation still hold the reins of moral responsibility. This method of additional documentation may be loosely compared to the distinction between supervised and unsupervised machine learning. In the former, humans still play a supervisory role, as they do in these archaeological examples. But what of unsupervised machine learning? Earlier in the text, we touched upon one of the pillars of AGI emergence: that it needs pre-programmed means of self-awareness in order to account for its own bias. By way of conclusion, we may speculate that until machine cognition systems are trained to recognise themselves, AI as lead investigator is doomed to fail to account for its own agency, which, according to our case study, has lasting repercussions. 
The thoughts expanded in this paper do not provide solutions; rather, they point towards alarming outcomes if the outlined complexities are disregarded. They acknowledge that the nature of these complexities lies within the computational phenomenon itself, or more specifically, its onto-epistemological capacity, materiality, and programmability. We may address growing concerns about possible future scenarios in which our past is largely augmented by automated AI investigators to which its study has been ingenuously outsourced. Hence, while the evolution of scientific tools is, in fact, “a good thing,” it is crucial to continuously highlight that this progress not only fails to eliminate existing biases but likely amplifies them. Awareness of these biases therefore has to be kept at the forefront of conversations and of the design of tools, so that we do not succumb to the naive fantasy that historical-detective-virtual-assistant-led research may be the way towards historical investigations at blazing ultra-resolution.

Fig. 3. Snapshot from GAN-generated latent space walk video from the CAS series, 2019, Egor Kraft.

Studio Addresses

Ikejiri, Setagaya, Tokyo, Japan

Neubau, Vienna, Austria

Studio

E. G. Kraft – artist-researcher, founder

Anna Kraft – researcher, director

Contact:

mail[at]kraft.studio
