Neural Magazine #72 “Machine Transparency”

Interview by Alessandro Ludovico

1. You once said that "art is a variety of all sorts of communication forms", which perhaps also manifests your interest in semiotics. Do you conceive your artworks as a semiotic sabotage of the dominant, invasive and all-absorbing technologies?

I'm equally occupied with semiotics, ontologies, the intersection of the two, and how they materialise through the computationally accelerated condition of today's industrialisation. I'm interested in how computation enables the modelling of knowledge about a domain, real or imagined, and does so through an incredibly complex, human-architected set of processes across matter & energy. Furthermore, these computational systems supercharge information with transmittable properties and programmability that significantly change what we do with information, as well as what it does to us. Hence, these technologies turn out to be "dominant, invasive and all-absorbing" with respect to any other ontologies. And, yes, I definitely like to sabotage systems; I think it helps to understand and examine their borders and capacities.

2. In a way, your project "The New Color" reinforces these fields. You made an online intervention for a non-existent American chemical company (ACI) announcing "a fictitious breakthrough consisting of a previously 'undiscovered' colour" that cannot be displayed on current RGB-based screens. How did you construct the powerful narrative and corroborating data to support this example of post-truth? And what do you think are the online conditions for truth?

'The New Color' developed rather spontaneously, beginning in 2008, when I put up a website for this fictitious company and its breakthrough. It may seem surprising now, but at that time we barely used terms such as 'post-truth' or 'fake news'. Since it immediately started gaining online attention, I was very interested in how far I could possibly take this absurdist idea. This motivated me to keep adding to it by filming ads, mockumentary interviews and leaked videos. But really, the most interesting part of the narrative was constructed by people who, in an attempt to contact the non-existent company, sent emails expressing interest in the development, offering cooperation, proposing investments, giving enthusiastic feedback and spreading bizarre rumours… Later I published a book made up of hundreds of such emails.

As for the online conditions for truth, I think that not only the conditions but the very notion of 'truth' has been significantly challenged by information technology. We have been very successful in constructing the hyper-accelerated information logistics of the internet, incredibly sophisticated image manipulation and modelling software and, of course, AI-driven forms of synthetic knowledge production, e.g. prompt-based generation of visual content. I genuinely believe that we now also need to build tools that can compute these conditions for truth, and the means to expose it. This actually occupies me in a series of other projects I'm currently working on.

'The New Color' Film Trailer

3. In your acclaimed Content Aware Studies series, you restore lost fragments of sculptures from classical antiquity by scanning the damaged parts and programming machine learning algorithms to generate the missing pieces, which are then 3D printed and added. With this work, you raise a number of uncomfortable questions about speculative historical enquiry and epistemic concerns about AI in terms of its knowledge production and associated reproduction of biases. What are your experiences with all these elements in this project?

First, I was genuinely fascinated by a few recent stories in which AI-driven historical research led to some bizarre forms of controversy. One particularly notable case is when AI researchers from the University of Alberta claimed that their model had cracked the Voynich Manuscript, a document carbon-dated to the 15th century whose incomprehensible content has baffled scientists for decades. However, their methodology was, of course, publicly doubted by other scholars, and some experts considered the likelihood that these AI-derived translations of the document were correct to be close to zero.

We were fascinated by the idea of an object that is synthetically generated and machine-sculpted while at the same time containing features of a historical document. An object of synthetic history. We began with algorithmic reconstructions of missing parts across existing sculptural objects from renowned museum collections. These speculative, algorithmic reconstructions were then machine-carved in marble and installed to fill the voids within the original objects. Such experiments generated visually the weirdest possible interpretations of the common guises of antiquity, beyond the limits of human imagination, and we immediately fell in love with them.

However, what I found particularly interesting was when an algorithm output a 3D model visually indistinguishable from an actual portrait sculpture of that style and era. Such a model would then be machine-fabricated from a block of marble, and in terms of its perceptual, and perhaps also material, properties it would be no different from an actual portrait sculpture. Yet instead of being carved by a Greek or Roman sculptor, it was rendered through the synthetic computational data manipulations of an AI model and sculpted through the precise automated operations of CNC machinery. I believe it triggers some captivating thinking around agency, hylomorphism, the ontology of being and becoming, and other metaphysical questions.

Another way of thinking about it is through the example of a 4K, 60 fps AI-upscaled version of “Arrival of a Train at La Ciotat”, a YouTube video that went viral a few years ago. I was fascinated by how many genuinely deep philosophical inquiries this public data-science experiment was supercharged with. The obvious ones are: what are these new pixels? What are these frames and colours with which the film was augmented through AI processing? What are they in terms of the ontological notion and properties of a document? They are rendered and computed through statistical equations based on thousands of other films. As a result, we have a historical document, one of the first films ever made, that is not merely upscaled but synthetically supercharged with the thousands of films made in the century and more since its creation. I like to think of it as synthetic post-documental epistemics.

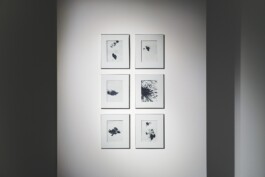

Content Aware Studies | alexanderlevy gallery, Berlin, DEU, 2019

4. Hashd0x [Proof of War] and Uncensorship Architecture are artworks that use blockchain storage infrastructure to resist censorship. The former stores timestamps, signatures, location data and algorithmically assigned unique hash values of images or videos on such independent infrastructure, while the latter aims to create a safe space for journalistic data and investigative work, with examples of banned Russian media. Do you think that an independent network of like-minded people with appropriate technology can make the infrastructural difference in such contexts, as was initially the case with WikiLeaks? And in your opinion, does the concept of digital truth make sense in a medium that consists of an infinitely changeable and programmable 'fabric'?

This series of works is about storing evidence, or perhaps even historically significant data, on infrastructure that was built for financial applications in the first place. By analogy, consider the well-known trend in the hacker community of installing the 1993 video game Doom on just about anything, from a DJ controller, an e-book reader or an electric piano to a printer. With Hashd0x, we are basically installing a camera app on an ATM. I think that the immutable, robust, scalable, secure and ever-present properties that blockchains inherit from their fintech nature may turn out to be very useful for registering and storing evidence, thus helping to compute the conditions for exposing truth… or at least making some progress in the verifiability of data. I'm not sure about the concept of digital truth, but I'm pretty sure about the concept of mere truth as opposed to non-truth. And again, I think that, technically, we can build infrastructural conditions that make it possible to distinguish more effectively between fact and fiction, and that expand the toolset of open-source investigation methodologies.

Hashd0x [Proof of War], alexanderlevy gallery, Berlin, DEU 2022 (c) Trewor Good
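The registration step behind Hashd0x, fingerprinting a photo or video and binding that fingerprint to a timestamp and location before anchoring it on a blockchain, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the artwork's actual implementation: SHA-256 as the hash function, a plain dictionary as the record format, and the helper names `fingerprint_media`, `make_evidence_record`, `record_digest` and `verify` are all hypothetical. The digital signature and the on-chain write are deliberately omitted.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint_media(data: bytes) -> str:
    """Return the SHA-256 hex digest of raw image or video bytes."""
    return hashlib.sha256(data).hexdigest()


def make_evidence_record(data: bytes, lat: float, lon: float, captured_at: datetime) -> dict:
    """Bundle a media fingerprint with capture metadata.

    Hypothetical schema for illustration only; the real Hashd0x record format is not described here.
    """
    return {
        "sha256": fingerprint_media(data),
        # Normalise to UTC so the same moment always serialises identically.
        "timestamp": captured_at.astimezone(timezone.utc).isoformat(),
        "location": {"lat": lat, "lon": lon},
    }


def record_digest(record: dict) -> str:
    """Hash a canonical JSON encoding of the record.

    In this sketch, this single digest is what would be written to a blockchain (write step omitted).
    """
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify(data: bytes, record: dict) -> bool:
    """Check that a media file still matches the fingerprint stored in its record."""
    return fingerprint_media(data) == record["sha256"]


if __name__ == "__main__":
    photo = b"raw image bytes would go here"
    rec = make_evidence_record(photo, 50.45, 30.52, datetime(2022, 3, 1, 12, 0, tzinfo=timezone.utc))
    print(record_digest(rec))
    print(verify(photo, rec))         # True: file unchanged
    print(verify(photo + b"!", rec))  # False: any edit changes the hash
```

Because changing even a single byte of the file changes its SHA-256 digest, anyone holding the original file can later recompute the hash and compare it with the stored value; the blockchain's role in this sketch is only to keep that value in a tamper-resistant, timestamped place. Note that such a check shows a file is unaltered since registration, not that what it depicts is true.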

5. Another piece on the war is Decentralised Embargo, which looks at the paradoxes of the Russian-Ukrainian war: "Using energy from a German provider, which burns Russian gas to create electricity, 4-GPU completes proof-of-work in real-time for the Ethereum blockchain, thus mining Ethereum currency directly into the official cryptocurrency wallet of Ukraine".

Do you think this "computational sculpture" (as you define it), exemplarily short-circuiting the absurdity of extractivist infrastructure, exacerbates the inextricable complexity of the intertwining of capital and technology?

Indeed, and perhaps also the hypocrisy and absurdity of today's political economy, in which the planetary-scale project of global capitalism aimed at building the conditions for well-lubricated logistics and the supply of capital, labour, information and resources. Liquidity of all stores of value was essential to it. However, it seems that other means of power, i.e. sovereign land, law, media and the military, have not been part of this convertibility project. Hence, when some elements of this system become subject to a conflict, the others begin to operate in ridiculously self-defeating ways.

Another idea has to do with the fundamental contradictions between the topologies of internet-based systems and those of the territorial sovereignty of nation states. The former increasingly take over functions that states were historically in charge of, e.g. the issuance and management of currency, logistics and many services. The design of the latter, meanwhile, has its historical origins in the treaties of the Peace of Westphalia of 1648, which set up the framework for international relations that is still present today. The reality is, it's a pretty old-fashioned idea. I genuinely believe that some of today's political issues are exacerbated by these mismatched topologies. Also, I think the end of the Cold War was an exceptionally suitable time for building internet infrastructure. Imagine if the internet were to be built nowadays: it would probably have resulted in a multitude of intranets associated with superpower regimes, with some highly controlled gateways or bridges in between… what a dystopia, right?

Decentralised Embargo at alexanderlevy gallery, Berlin, DEU 2022 (c) Egor Kraft

6. In a similar vein, in PropaGAN you have developed a machine that uses a GAN (generative adversarial network) algorithm to generate moving images from a "dataset of explosions, smokes and cloud" in perfect sync with an interview excerpt with Sergey Lavrov, in which he talks about Russia's intention to use nuclear weapons and says that this has been misunderstood. From your point of view, is this conceptual feedback loop between the controversial words and the images you create possibly a method of demystifying propaganda rhetoric? And would you call it counter-propaganda?

Counter-propaGANda: I like it. Very accurate. I agree; nothing further to add.

PropaGAN at alexanderlevy gallery, Berlin, DEU 2022 (c) Egor Kraft

7. Your film Air Kiss describes a speculative future where there is "a collectivized system in which governance is done by AI", with algorithmic decision-making and more generally, where artificial intelligence becomes ubiquitous and connects body and mind in the city into an all-encompassing system. This project seems to break the cultural trend of letting technologies regulate our lives, with the miserable predictive paradigm of AI being accepted as authority. What is the most nightmarish environment among those you have outlined? And would you agree that one of its greatest dangers is to endlessly repeat our past (with all its faults)?

I really enjoy these questions. I would absolutely agree that one of the greatest dangers lies in endlessly repeating our past and the ways we messed things up in it. Of course, the past will always make up the training data, thus limiting the potential output to a mash-up of things from the past. Another nightmarish condition would be one in which we are all placed in a self-propelling narcissistic feedback loop, such that all of our experiences are predetermined and automatically designed to feed into this endless satisfaction loop. And as we succumb to this hypnotic condition, we will be on our way to completely losing any ability to critically address the system, to change or calibrate it. The film ends with a scene that portrays this in a very allegorical way.

'Air Kiss' Film Trailer

8. In Twelve Nodes you introduce the concept of Fair Data, "a framework and guideline for organisations to control personal data" in order to achieve a Fair Data Society. In your view, is the definition of such a universal codified system a key element in the equally universal reclamation of our disintegrating boundary between the public and the private? And do you think such a society is concretely possible, given the current hyper-capitalisation of this kind of data?

I think that both information technologies and various forms of capitalism are similar in their invasive ability to embed their own logic and dynamics within the relationships of almost anything. We've arrived at this horrifying point, at which they not only work hand in hand but have also become something unified and inseparable. I believe the reclamation of what you refer to as the "disintegrating boundary between the public and the private" IS possible and can be achieved through design & calibration. To me, the question is: who actually has the power to design it, and to what end?

There's a popular idea in recent internet discourse that "Code is Law". It often suggests that transposing legal or contractual provisions into a smart contract can give rise to a participatory model of code-based, automatically self-enforced rules. This raises the question: who designs this code-slash-law, and to what end? The so-called decentralised and participatory politics surrounding such projects suggest that the rules of the game should be set collectively but enforced automatically. This is where the idea of collectively designed, voluntary meta-legislation, such as the 'Fair Data Principles', comes in. It's not my idea; there's a community behind it.

I was merely inspired to think about it in relation to the history of law, which took shape in the work 'Twelve Nodes'. It essentially paraphrases the Roman Twelve Tables, which stood at the foundation of European law. At that time, one of the first instances of publicly displaying citizens' rights in the public & private spheres was achieved by installing inscribed bronze tablets at the centre of ancient Rome. I'm very curious: what is the speculative future of law? Perhaps 'Twelve Nodes' is somewhat similar to my older piece 'The URL Stone' in how it questions the durability, scalability and other properties of media carriers. In the case of 'Twelve Nodes', though, the carrier is also the law.

In contrast to McLuhan's "the medium is the message", there's the quote "the messenger is the message, the medium is the law…", which I found in Goodrich & Wan's book on 'post-law'.

'Twelve Nodes' Film Trailer

9. You also said that technological development is moving so fast that it seems we are guinea pigs in a global experiment, but also that "technology is more a discovery rather than an invention". Can you elaborate?

Oh, no. What I actually said was that I genuinely believe the phenomenon of computation is a discovery rather than an invention. I refer to it as a process of manipulating information through the collision of matter and energy to execute binary encoding/decoding operations, in which there are exactly two possible states through which computing is achieved. That in itself is an incredible discovery. The rest of the infrastructure upon which it is built is definitely a technology, though. I think I first heard this idea from Benjamin Bratton, who was my professor at Strelka, although I'm sure he would prefer it said in his own words.

10. In the current dichotomy between deep fascination with AI being used in the humanities and rejection of its tangible dangers in this context, you position yourself by acknowledging its "complex form of bias" that is difficult to decipher and demanding that philosophers work authoritatively with it. What do you think philosophers can do to defuse the multiple forms of bias that we can already observe in the current popular generative platforms?

I don't know whether philosophers can necessarily do anything about it. But perhaps the best endeavour would be for them to be more engaged in modelling and designing software. I can see some brilliant tendencies in this direction in a few amazing think tanks and postgraduate platforms forming internationally, and I was very lucky to be part of one of those programmes. Overall, it is very tempting to work with AI in the humanities and research. It can automate a lot. But it is not a magnifying glass, if you know what I mean. I really think that the worst we could end up with is to train it on what we have been all this time, thus making it inherently capable of imitating us. Instead, we might try to approach it as a form of programmable intelligence that can potentially help us understand what it is that actually makes intelligence.

Studio Addresses

Tokyo, Mishuku, JPN

Vienna, Neubau, AUT

Studio

Egor Kraft – artist-researcher, founder

Anna Kraft – researcher, director

Downloads

Contact Details

mail/at/kraft.studio
